Future Convenience Store Challenge

- Vision

The vision of the Happy Robot team is “Making the World Happy by Making a Kawaii Robot.” “Kawaii” means cute, lovely, or charming. We believe that a kawaii robot can help solve the problems of an aging society and make convenience stores in Japan happier places for customers. Happy Mini, shown in Fig.2, is designed in the image of a little child and has a lively yellow color [1]. The software system is based on ROS and state-of-the-art frameworks such as Caffe, Darknet, DIGITS, HARK, and Kaldi.

The Happy Robot team has participated in the RoboCup@Home league of the RoboCup Japan Open since 2012, and in the RoboCup world competitions of 2015, 2016, 2017, and 2018, where it placed 9th, 8th, 9th, and 5th, respectively.

- Video explaining the system outline

- Stocking and Disposing Task

- Customer Interaction Task

- Restroom Cleaning Task

- Team

- Adviser: Kosei Demura

- Member: Yamato Fujibayashi (Leader), Syuhei Mashimo, Minami Kawaguchi, Yoshiki Murai, Ranka Mori

- Our Happy Robots

- Happy Mini

Fig.2 Happy Mini

| Item | Specification |
| --- | --- |
| Height [m] | 0.93~1.16 |
| Width [m] | 0.35 |
| Length [m] | 0.38 |
| Weight [kg] | 9.5 |
| Max speed [m/s] | 0.7 |
| Arm D.O.F. | 4 |
| Base | Kobuki, Yujin |
| Computer | Jetson Xavier, Nvidia |
| Lidar | UTM-30LX, Hokuyo |
| RGB-D Sensor | RealSense D435, Intel |

- Happy Burger

| Item | Specification |
| --- | --- |
| Height [m] | 0.5 |
| Width [m] | 0.5 |
| Length [m] | 0.5 |
| Weight [kg] | 8.0 |
| Max speed [m/s] | 0.5 |
| Arm D.O.F. | 4 |
| Base | Roomba 890, iRobot |
| Arm | Mikata, Robotis |
| Computer | Alienware 15, Dell |
| Lidar | UTM-30LX, Hokuyo |
| RGB-D Sensor | RealSense D435, Intel |

- Happy Truck

| Item | Specification |
| --- | --- |
| Size [mm] | 500 x 1000 x 1500 |
| Weight [kg] | 20 |
| Power Source | Li-ion, 11.2 [Ah] |
| Power Unit | Joy Unit X, Yamaha |
| Arm D.O.F. | 5 |
| Computer | Alienware 17, Dell |
| GPU | GTX 1070, NVIDIA |
| Lidar | UTM-30LX, Hokuyo |
| RGB-D Sensor | RealSense D435, Intel |
| Tracking Camera | RealSense T265, Intel |
| Omni Camera | PIXPRO SP360 4K, Kodak |

- Hardware

- MIKATA Arm

One of our Happy Robots uses the MIKATA arm, the Open Source Manipulator by DYNAMIXEL.INC. We customized the MIKATA arm to increase its payload. The customization consists of double motors and gravity compensation.

- FingerVision

FingerVision is a vision-based tactile sensor (optical skin) for robot hands, developed by Akihiko Yamaguchi and Christopher Atkeson. Yamaguchi is a joint researcher of the Happy Robot team. The sensor provides many functions, such as contact force distribution sensing and proximity vision (slip, deformation, and object pose estimation).

Using FingerVision, a robot can grasp many kinds of objects, e.g., soft, fragile, or slippery objects. Moreover, the sensor is easy to manufacture, cheap (about $50 per sensor), and physically robust.

- Object Detection, Recognition & Manipulation

We are developing a cascade deep neural network that combines YOLO (You Only Look Once), a real-time object detection neural network, with a conventional CNN such as LeNet or AlexNet.

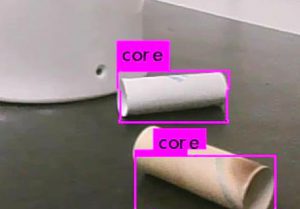

First, we use a pretrained YOLO for object detection and rough classification. Second, the CNN classifies each object more precisely using the outputs of YOLO. The merit of this cascade deep neural network is very fast training: because YOLO is used pretrained, only LeNet or AlexNet has to be trained on the data set. The result of object recognition is shown in Fig.5. In the WRS2018, we used only YOLO v3, and it successfully detected the cores with the same weights trained in our lab, as shown in Fig.7. Its object recognition accuracy is higher than that of YOLO v2. However, because YOLO v3 cannot estimate an object's pose, we will use Mask R-CNN for object recognition and semantic segmentation.
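The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration of the cascade idea, not the team's code: `Detection`, `cascade`, `toy_detector`, and `toy_classifier` are hypothetical names, the detector is a fixed stand-in for a pretrained YOLO, and the classifier is a brightness rule standing in for LeNet or AlexNet.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Detection:
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels
    coarse_label: str               # rough class from the first stage
    score: float                    # detector confidence in [0, 1]


def crop(image, box):
    """Cut the detected region out of a row-major image (list of rows)."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def cascade(image, detect, classify, min_score=0.5):
    """Stage 1 proposes boxes; stage 2 refines the label of each crop."""
    results = []
    for det in detect(image):
        if det.score < min_score:
            continue  # drop weak detections before the second stage
        fine_label = classify(crop(image, det.box), det.coarse_label)
        results.append((det.box, det.coarse_label, fine_label))
    return results


def toy_detector(image):
    # Hypothetical stand-in for a pretrained YOLO: returns fixed boxes.
    return [Detection((0, 0, 2, 2), "drink", 0.9),
            Detection((2, 0, 2, 2), "snack", 0.3)]


def toy_classifier(patch, coarse_label):
    # Hypothetical stand-in for LeNet/AlexNet: decides by mean brightness.
    mean = sum(map(sum, patch)) / (len(patch) * len(patch[0]))
    suffix = "bright" if mean > 127 else "dark"
    return f"{coarse_label}/{suffix}"


image = [[200, 210, 10, 20],
         [220, 230, 30, 40]]
print(cascade(image, toy_detector, toy_classifier))
```

Only the second-stage classifier would need retraining for a new object set in such a design, which is the source of the fast training mentioned above.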

Next, the grasping point is calculated from the point cloud data of the RGB-D sensor. The robot removes planar surfaces and outliers from the point cloud, clusters the points that correspond to the object's image region, and calculates the centroid of the cluster. This calculation uses the Point Cloud Library (PCL). Finally, the robot solves the inverse kinematics for the object's centroid and grasps the object.
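The grasp-point steps above (plane removal, outlier removal, centroid) can be illustrated without PCL. The following is a minimal pure-Python sketch on synthetic data, not the team's implementation: all function names, thresholds, and the test scene are assumptions, RANSAC is assumed for the plane fit, and a simple radius-based filter is assumed for the outlier removal.

```python
import math
import random


def fit_plane(p, q, r):
    """Plane through three points as (unit normal n, offset d); None if collinear."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-9:
        return None
    n = tuple(c / norm for c in n)
    return n, sum(n[i] * p[i] for i in range(3))


def remove_plane(points, threshold=0.01, iterations=200, seed=0):
    """RANSAC: find the plane with the most inliers and return the other points."""
    rng = random.Random(seed)
    best_inliers = set()
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = {i for i, pt in enumerate(points)
                   if abs(sum(n[k] * pt[k] for k in range(3)) - d) < threshold}
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return [pt for i, pt in enumerate(points) if i not in best_inliers]


def remove_outliers(points, radius=0.05, min_neighbors=3):
    """Keep points with at least min_neighbors other points within radius."""
    kept = []
    for i, p in enumerate(points):
        count = sum(1 for j, q in enumerate(points)
                    if j != i and math.dist(p, q) <= radius)
        if count >= min_neighbors:
            kept.append(p)
    return kept


def centroid(points):
    """Mean position of the points, used here as the grasp point."""
    return tuple(sum(p[k] for p in points) / len(points) for k in range(3))


# Synthetic scene: a table at z = 0, a small object above it, one stray point.
table = [(i * 0.05, j * 0.05, 0.0) for i in range(10) for j in range(10)]
obj = [(0.2 + 0.02 * a, 0.2 + 0.02 * b, 0.1 + 0.02 * c)
       for a in (-1, 0, 1) for b in (-1, 0, 1) for c in (-1, 0, 1)]
cloud = table + obj + [(1.0, 1.0, 1.0)]

off_plane = remove_plane(cloud)
object_points = remove_outliers(off_plane)
grasp_point = centroid(object_points)
print(grasp_point)
```

On a real robot the input cloud would first be restricted to the points that project into the detected image region, and the resulting centroid would be passed to the inverse kinematics solver.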

- Speech Recognition, Synthesis and Sound Localization

We have developed a speech recognition system and a sound source localization system. The speech recognition system uses the Kaldi-based GStreamer server, a real-time full-duplex speech recognition server, with a DNN-based English model trained on the TED-LIUM speech corpus.

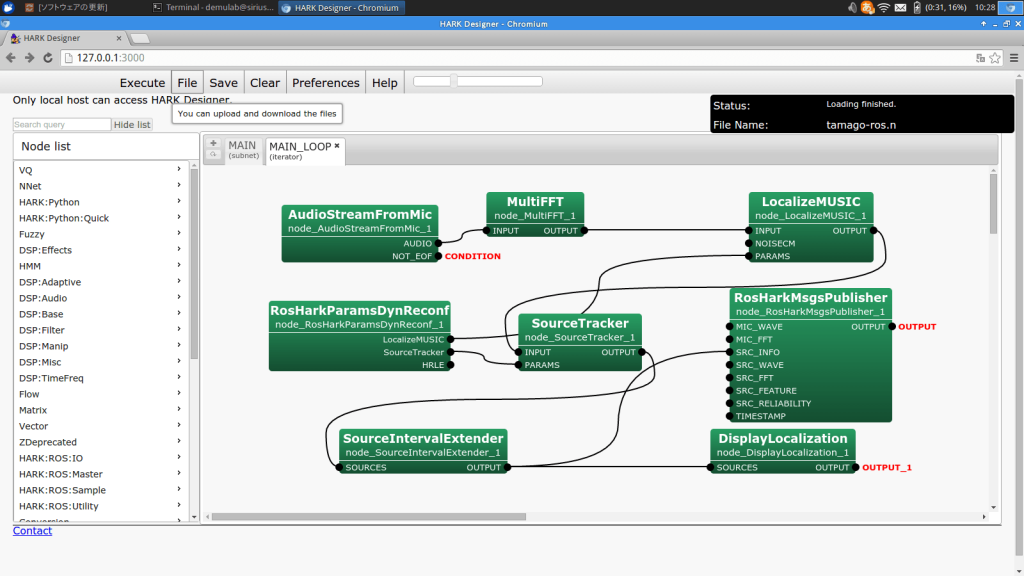

The sound source localization system is implemented using HARK, a robot audition library. HARK can easily be programmed through its GUI, as shown in Fig.8. The system takes multi-channel speech waveform data from the 8-channel microphone array, computes the FFT, and estimates the sound source direction by the MUSIC method.
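For reference, the textbook form of the MUSIC estimate can be written as follows. The notation is an assumption introduced here and is not taken from HARK, whose implementation may normalize or average differently: $\mathbf{x}(\omega,t)$ is the 8-element vector of microphone spectra at frequency $\omega$ and frame $t$ after the FFT, $\mathbf{a}(\theta,\omega)$ is the steering vector for direction $\theta$, and $\mathbf{E}_n(\omega)$ collects the eigenvectors of $\mathbf{R}(\omega)$ that span the noise subspace.

```latex
\mathbf{R}(\omega) = \frac{1}{T}\sum_{t=1}^{T}
  \mathbf{x}(\omega,t)\,\mathbf{x}^{H}(\omega,t),
\qquad
P(\theta,\omega) =
  \frac{\mathbf{a}^{H}(\theta,\omega)\,\mathbf{a}(\theta,\omega)}
       {\mathbf{a}^{H}(\theta,\omega)\,\mathbf{E}_{n}(\omega)\,
        \mathbf{E}_{n}^{H}(\omega)\,\mathbf{a}(\theta,\omega)}
```

The estimated source direction is the $\theta$ at which $P(\theta,\omega)$ peaks, because the steering vector of a true source is nearly orthogonal to the noise subspace and the denominator becomes small there.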

Since 2019, we have been developing a new speech recognition system using the Web Speech API (online) and DeepSpeech (offline). For speech synthesis, we use picotts, a lightweight ROS package for Text-to-Speech (TTS) generation that wraps popular online and offline TTS engines. We use the MaryTTS engine offline and the Microsoft engine online. For sound localization, we use the ReSpeaker Mic Array V2.0 from Seeed Studio.

- Simulator

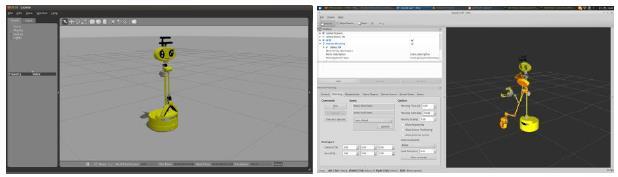

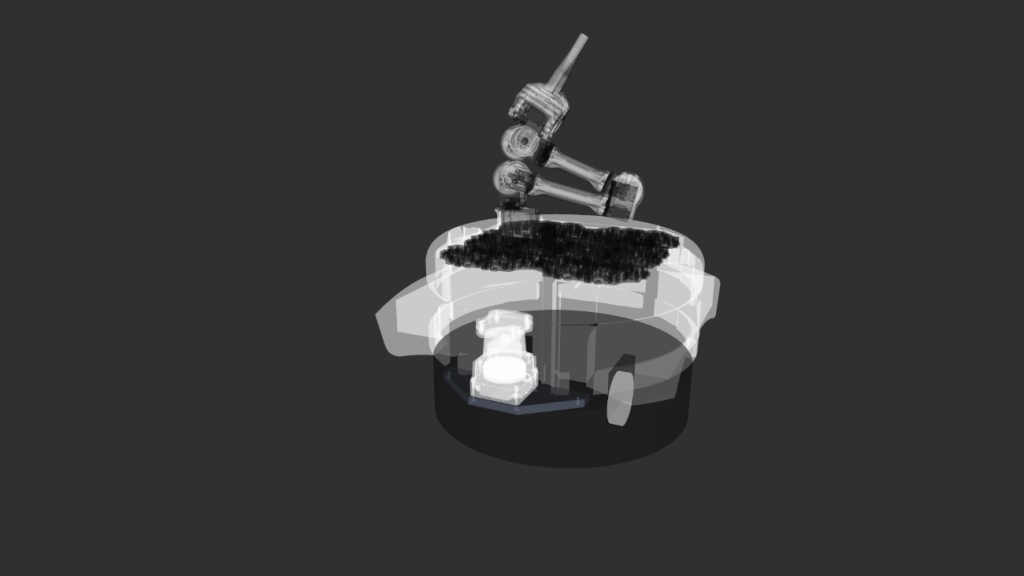

We have developed a Happy Mini and Happy Burger simulator using the Gazebo robot simulator, as shown in Fig.9 and Fig.10. To make the 3D Happy Mini model in the simulator, we wrote a URDF (Unified Robot Description Format) file and imported 3D meshes from the 3D CAD data. MoveIt! can easily be applied to a 3D model described in URDF.

- Competition Results

- These results include not only those of the Happy Robot team but also those of its predecessor teams, happy mini and WinKIT. Prof. Demura is the adviser of these teams.

- World Robot Summit

- WRS2018, Tokyo, Japan

- Future Convenience Store Challenge

- RoboCup

- 2002

- RoboCup 2002, Fukuoka/Busan [Middle Size League] 2nd place

- 2003

- RoboCup Japan Open 2003 [Middle Size League] 1st place

- RoboCup 2003, Padova, Italy [Middle Size League] 2nd place

- 2004

- RoboCup Japan Open 2004 [Middle Size League] 2nd place

- RoboCup 2004, Lisbon, Portugal [Middle Size League] 2nd place

- 2006

- RoboCup Japan Open 2006 [Middle Size League] 3rd place

- 2007

- RoboCup Japan Open 2007 [Middle Size League] 3rd place

- 2009

- RoboCup Japan Open 2009

- Middle Size League: 2nd place

- Humanoid Kids Size: 3rd place

- 2010

- RoboCup Japan Open 2010

- Middle Size League: 2nd place

- Humanoid Kids Size: 2nd place

- 2011

- RoboCup Japan Open 2011 [Middle Size League] 3rd place

- 2012

- RoboCup Japan Open 2012

- Middle Size League: 2nd place

- Humanoid Kids Size: 2nd place

- @Home Real Robot League: Lost in a preliminary round

- 2013

- RoboCup Japan Open 2013

- Middle Size League: 2nd place

- Humanoid Kids Size: 3rd place

- @Home Real Robot League: Lost in a preliminary round

- 2014

- RoboCup Japan Open 2014

- @Home Real Robot League: 8th place

- @Home Simulation League: 3rd place (Team name: Yumekobo Junior)

- 2015

- RoboCup Japan Open 2015

- @Home Real Robot League: 5th place

- @Home Simulation League: 5th place

- RoboCup 2015, Hefei, China

- @Home: 9th place

- 2016

- RoboCup Japan Open 2016

- @Home Education: 1st place

- RoboCup 2016, Leipzig, Germany

- @Home: 8th place

- 2017

- RoboCup Japan Open 2017

- @Home Open Platform League: 4th place

- RoboCup 2017, Nagoya, Japan

- @Home Open Platform: 9th place

- 2018

- RoboCup 2018, Montreal, Canada

- @Home Open Platform: 5th place

[1] Happy Mini, our human support robot, ranked 3rd in sympathy and 5th in design in the @Home league at RoboCup 2016 Leipzig according to research by Eva Jahn, as shown on this web site. Many wonderful robots, such as Pepper, HSR, R1, SocRob, Amigo, and Tiago, participated in RoboCup@Home 2016.