The Happy Robot team official website

- Mission

The Happy Robot team has been participating in the RoboCup@Home league of the RoboCup Japan Open since 2012, and participated in the RoboCup world competitions in 2015, 2016, 2017, and 2018. The team took 9th, 8th, 9th, and 5th place in those competitions, respectively. From 2019 to 2022, we could not participate in the world competition due to COVID-19.

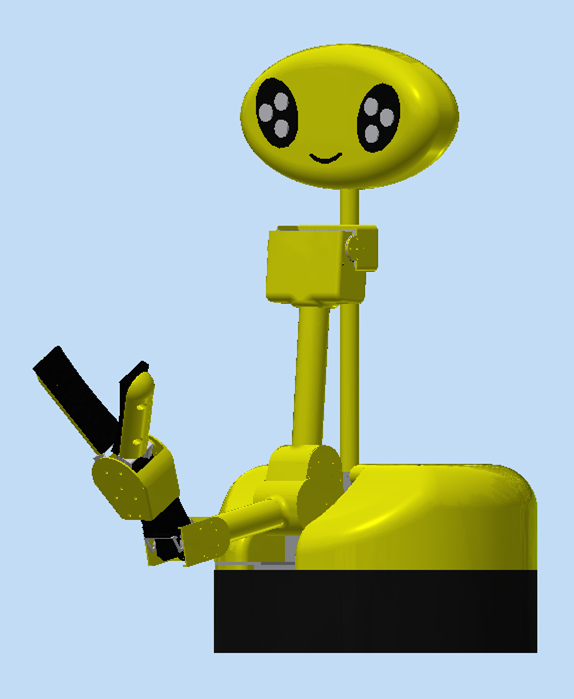

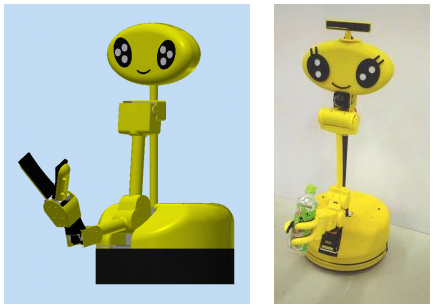

The mission of the Happy Robot team is to create robots that make people happy. Our robots, shown in Fig.1, are designed in the image of a little child with a lively yellow color. We think that the design of home robots should be friendly to the elderly and children. Our robots have pioneered this design concept in the RoboCup@Home league since 2015. The software system is based on ROS and state-of-the-art frameworks such as Detectron2, BlenderProc, and OpenCV.

- Robot

(1) Hardware

The first generation: Happy Mini

We developed human support robots for children and the elderly. To foster the research and development, the Demura Research Laboratory has been participating in RoboCup@Home since 2012.

The design concepts of Happy Mini are Kawaii (lovely, cute), simplicity, safety, and usability. Kawaii is the most important aspect of human-robot interaction for Mini. Mini is an infant robot, and its abilities are limited, as are those of other robots in RoboCup. Mini therefore needs human help, which is why a kawaii appearance and voice are crucial. The exterior of Mini is roundish and lively yellow, as shown in Fig.1. Technical information on these robots is given in our team description paper.

Happy Mini (Left: original CAD design, Right: final real robot)

Fig.1 Happy Robot Family

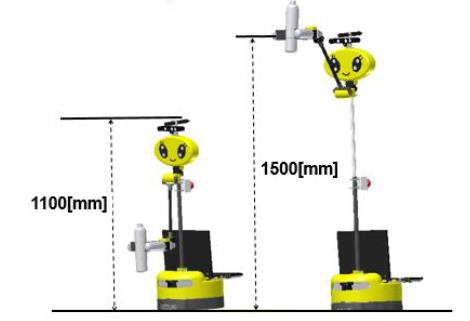

Fig.2 Extendable Torso

Table.1 Specification

| Item | Happy Mini |
| --- | --- |
| Height [m] | 1.10~1.50 |
| Width [m] | 0.32 |
| Length [m] | 0.32 |
| Weight [kg] | 10.0 |
| Max speed [m/s] | 0.7 |
| D.O.F | 5 |

The second generation: Happy Bird

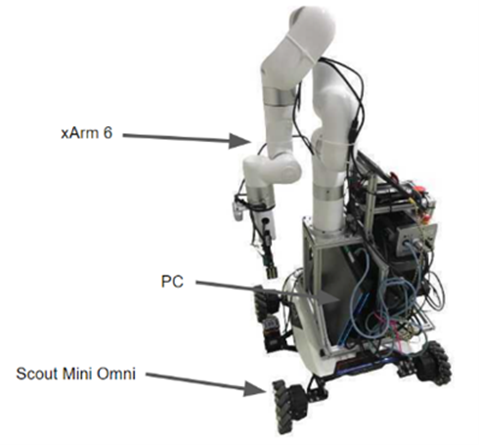

We have been developing a second-generation robot called Happy Bird as shown in Fig.3.

Hardware

- Robot dimensions: height 1.2 [m], width 0.55 [m], depth 0.6 [m]

- Weight: 49 [kg]

- Base: Scout Mini Omni, max speed 2.7 [m/s]

- Arm: xArm6, 6 DoF, payload 5 [kg], weight 9.5 [kg], reach 0.7 [m]

- PC: Dell Alienware R17

Software

- Platform: ROS Melodic

- Navigation: ROS Navigation stack

- Localization and mapping: AMCL and Gmapping

- Object recognition: Detectron2 Mask R-CNN

- Speech recognition: VOSK offline speech recognition API

- Speech generation: MaryTTS

- Arm control: ROS MoveIt!

Fig.3 Happy Bird

(2) Software

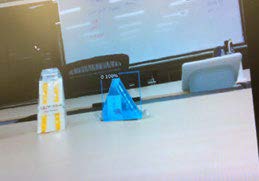

Object recognition is performed using Mask R-CNN trained with automatically generated training data. The system uses Blender, BlenderProc, OpenCV, and Detectron2. BlenderProc is software that generates training data using Blender and 3D models. Fig.4 shows examples of generated data. The generalization performance of Mask R-CNN is improved by data augmentation using OpenCV, i.e., adding noise, random rotation, grayscaling, darkening and brightening, and color modification. Fig.5 shows the results of object detection by Mask R-CNN.

Fig.4 Examples of data

Fig.5 Results of object recognition

- Research Contribution

(1) The Fluorescent AR Marker

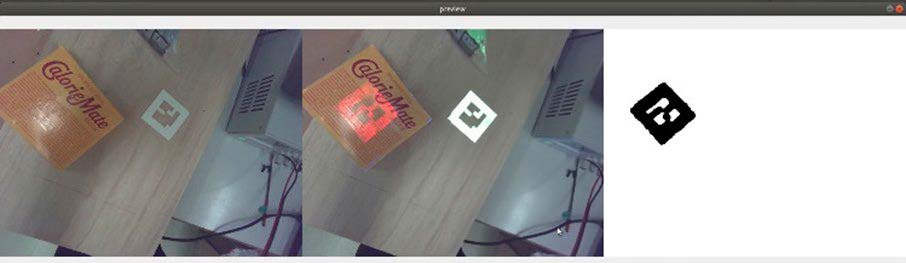

We have developed a 6DoF pose estimation network using a dataset that is automatically annotated with the Fluorescent Augmented Reality (AR) Marker, as shown in Fig.6. The marker is a transparent film coated with fluorescent paint that glows under ultraviolet (UV) light, and it is cut to match the texture of the object it is applied to. By switching between visible and UV light, the marker makes it possible to automatically annotate RGB images, generate mask images for semantic segmentation, and obtain 6DoF pose information. The Fluorescent AR Marker is transparent to visible light and preserves the texture of the target object, making it possible to automatically annotate 6DoF pose information that is difficult to obtain using other methods. The 6DoF pose estimation network uses the Fluorescent AR Marker to learn the 6DoF pose of the target object and can output the 6DoF information without the marker during inference. 6DoF pose information is crucial for manipulation tasks and has potential future applications.

The Fluorescent AR Marker makes it easy to create a dataset containing 6DoF pose information for Deep Learning, which was previously difficult to obtain. This research makes a significant contribution to robotics and Deep Learning.

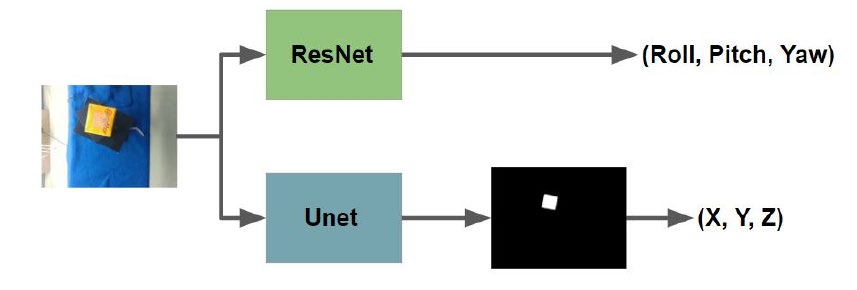

We also proposed a 6DoF pose estimation network that can learn the 6DoF pose of an object with a Fluorescent AR Marker, as shown in Fig.7. During inference, the network can output the 6DoF pose of the object without the marker. The average error between the inferred pose and the supervised data is 7.2 [mm], which is similar to that of a traditional AR marker. The ability to estimate 6DoF pose is critical for tasks such as robot manipulation, and future applications are anticipated.

In the future, we plan to apply our proposed method to transparent objects such as plastic bottles and glasses, as well as shiny metals, for which recognition and 6DoF pose estimation are extremely difficult with existing methods.
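The text does not specify how the average error is computed. One common choice, shown here purely as an assumption, is the mean Euclidean distance between predicted and reference positions, optionally complemented by the angle of the relative rotation. The function names and sample values below are hypothetical and are not the team's data.

```python
import numpy as np


def mean_translation_error(pred_t, true_t):
    """Mean Euclidean distance between Nx3 position arrays (same unit as input)."""
    pred_t = np.asarray(pred_t, dtype=float)
    true_t = np.asarray(true_t, dtype=float)
    return float(np.linalg.norm(pred_t - true_t, axis=1).mean())


def rotation_error_deg(pred_R, true_R):
    """Angle of the relative rotation between two 3x3 rotation matrices, in degrees."""
    rel = np.asarray(pred_R, dtype=float) @ np.asarray(true_R, dtype=float).T
    # trace(R) = 1 + 2*cos(angle); clip guards against rounding outside [-1, 1].
    cos_angle = np.clip((np.trace(rel) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))


# Illustrative values in millimetres (made up for the example).
pred = [[0.0, 0.0, 0.0], [3.0, 4.0, 0.0]]
ref = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
err_mm = mean_translation_error(pred, ref)

# A 90-degree rotation about the z axis compared with the identity.
rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
err_deg = rotation_error_deg(rz90, np.eye(3))
```

Reporting translation and rotation errors separately avoids mixing units, which matters when comparing against a marker-based baseline.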

Fig.6 The Fluorescent AR Marker: The left image is not irradiated with UV light. The center image is irradiated with UV light. The right image is the difference between the left and center images.

Fig.7 A proposed 6DoF network

(2) The jamming gripper with an adsorption mechanism

Japan is facing a serious labor shortage due to the declining birthrate and aging population. This has led many companies to consider introducing robots. With the recent development of soft robotics, soft grippers are attracting attention in industry because they can grasp objects with complex shapes and soft objects such as food, which have been difficult for conventional grippers. This research aims to develop a soft gripper that can grip a wide variety of objects.

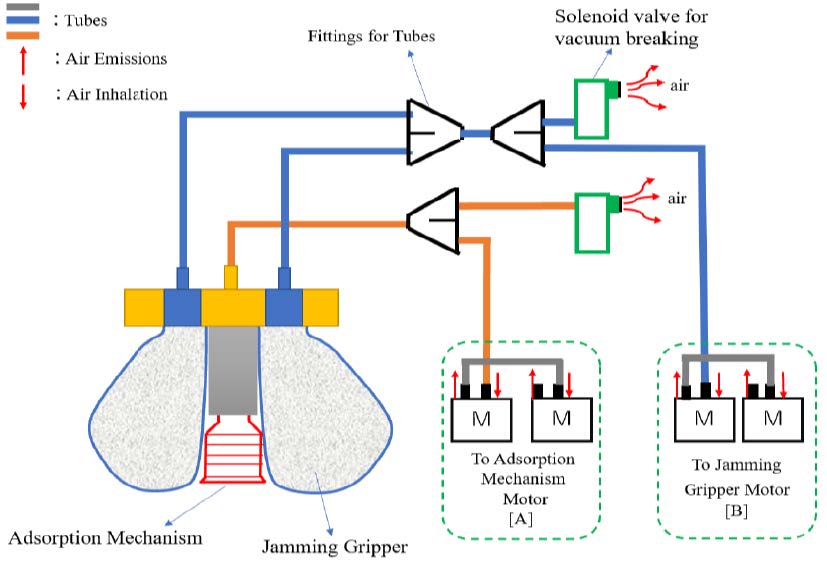

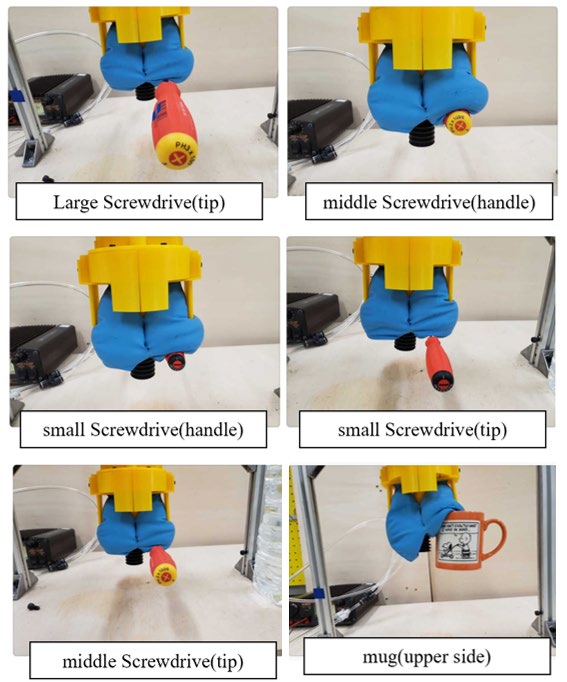

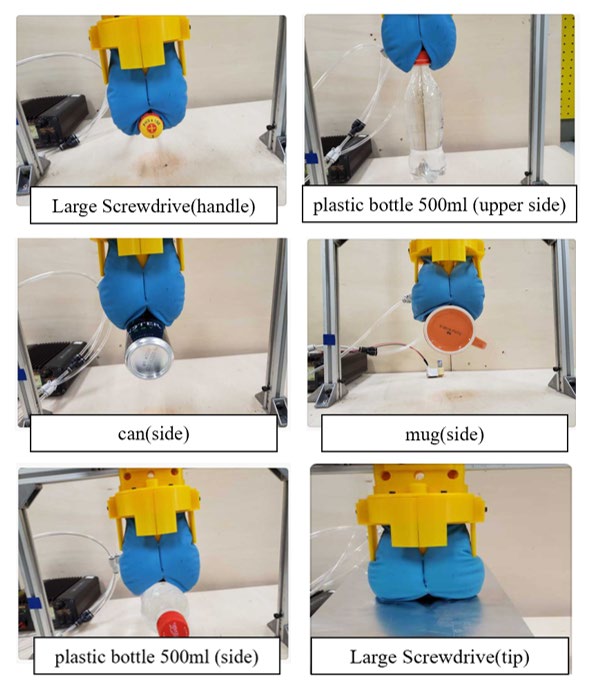

To realize a gripper capable of gripping various objects, we have developed a gripper with a suction pad attached to the center of a jamming gripper, as shown in Fig.8. The combination compensates for the weaknesses of each mechanism without losing the advantages of either the jamming gripper or the suction gripper. In addition, the gripper can switch between the suction pad and the jamming gripper, so the grasping method can be selected according to the object to be grasped. Fig.9 and Fig.10 show the results of grasping experiments. The gripper can grasp various objects such as small, medium, and large screwdrivers, a plastic bottle, a can, a mug, and an aluminum plate.

Fig.8 Diagram of a jamming gripper with an adsorption mechanism

Fig.9 Grasping with an adsorption pad part

Fig.10 Grasping with a jamming gripper part

- Team Description Paper

- Publications

- Journal Paper

- Ren Matsubara, Kosei Demura: Development of a general-purpose gripper with selectable gripping method by jamming transfer and adhesion (in Japanese), Journal of the Robotics Society of Japan, 2023. (to be published)

- Kosuke Mizutani, Nobuhiro Nagasawa, Kosei Demura: Development of automatic opening and closing shelf for convenience stores in cooperation with collaborative robot, Advanced Robotics, Dec, 2022

- Shogo Okano, Tatsuhito Makino, Kosei Demura: Fluorescent Texture: Proposal of a 2-3D Automatic Annotation Method for Deep Learning (in Japanese), Journal of the Robotics Society of Japan, 40(1) 71-82, Jan, 2022

- Mizuho Shibata, Kosei Demura, Shinichi Hirai, Akihiro Matsumoto: Comparative Study of Robotics Curricula, IEEE Transactions on Education, 64(3) 283-291, 2020

- Kosei Demura: Utilization of Middleware in a Virtual Robot Competition Robotics X (in Japanese), Journal of the Society of Instrument and Control Engineers, 57(10) 725-728, 2018

- Book

- Kosei Demura, Yoshinobu Hagiwara, Yasuhiro Masutani, Jeffrey Too Chuan TAN: Introduction to AI Robotics – Building and Learning with ROS2 and Python – (in Japanese), Kodansha Ltd., Publishers, Aug, 2022

- Software

- Team

- Adviser: Kosei Demura

- Member: Ryo Hidano (Leader), Yuuki Ishiyama, Masaaki Ida, Takumi Kishimoto, Ren Kawasumi

- Previous participation in RoboCup@Home

- RoboCup Japan Open 2012

- @Home Real Robot League: Lost in a preliminary round

- RoboCup Japan Open 2013

- @Home Real Robot League: Lost in a preliminary round

- RoboCup Japan Open 2014

- @Home Real Robot League: 8th place

- @Home Simulation League: 3rd place (Team name: Yumekobo Junior)

- RoboCup Japan Open 2015

- @Home Real Robot League: 5th place

- @Home Simulation League: 5th place

- RoboCup 2015, Hefei, China

- @Home: 9th place

- RoboCup Japan Open 2016

- @Home Education: 1st place

- RoboCup 2016, Leipzig, Germany

- @Home: 8th place

- RoboCup Japan Open 2017

- @Home Open Platform League: 4th place

- RoboCup 2017, Nagoya, Japan

- @Home Open Platform: 9th place

- RoboCup Japan Open 2018

- @Home Open Platform: 3rd place

- RoboCup 2018, Montreal, Canada

- @Home Open Platform: 5th place

- RoboCup Japan Open 2021

- @Home Open Platform: Participated

- @Home Education: 3rd place

That’s all. Thank you!